Amazon FBA sellers are turning to AI scraping tools to find profitable products, but Amazon’s sophisticated defenses make this incredibly risky. There’s a safer alternative that’s actually delivering better results for serious sellers.

Amazon FBA sellers constantly search for a competitive edge: product leads that will drive profits without the usual headaches. Many turn to scraping tools, especially AI-powered ones, hoping to automate their product research. But Amazon's fortress-like defenses make scraping far more challenging and risky than most sellers realize.

Amazon operates one of the most sophisticated anti-scraping systems on the internet. The e-commerce giant has invested heavily in blocking automated data collection, making successful scraping very difficult for the average seller. Many scraping attempts fail soon after they start, triggering defensive responses that can escalate quickly.

The platform's defensive measures aren't just technical barriers; they're backed by strict terms of service that explicitly prohibit automated data collection. Violating these terms can result in permanent account suspension, IP blocking, and potential legal action. For FBA sellers whose livelihoods depend on their Amazon accounts, these risks far outweigh any possible benefits of scraped data.

Amazon's detection systems analyze many aspects of user behavior in real time. Request patterns, user-agent strings, access frequencies, and even mouse movement patterns are monitored to identify potential scraping activity. The algorithms can distinguish between human browsing behavior and automated requests with remarkable accuracy.

These detection systems operate at multiple layers, from network-level monitoring to application-specific behavioral analysis. They can identify coordinated scraping attempts across different IP addresses and detect even sophisticated rotating proxy setups. The system learns and adapts continuously, making it increasingly difficult for scrapers to find workarounds.

Amazon's Terms of Service explicitly prohibit automated data collection, and the company actively enforces these restrictions. Account terminations can occur, sometimes with limited warning or appeal options, depending on the severity of the violation. For sellers who have built their businesses around their Amazon accounts, this represents an existential threat.

The consequences extend beyond just losing access to scraping capabilities. Sellers may lose their entire FBA business, including inventory stored in Amazon warehouses, pending payments, and years of built-up seller metrics. The legal implications can also extend to civil action, particularly for commercial scraping operations.

Amazon regularly updates its website structure to maintain platform integrity and thwart automated scraping tools. These changes can occur frequently, so a scraper that works perfectly one day may fail completely the next, leaving sellers with an unreliable data stream.

The dynamic nature of Amazon's site structure means that maintaining functional scrapers requires constant updates and monitoring. Most sellers lack the technical expertise and time investment needed to keep their scraping tools working consistently. This leads to gaps in data collection and potential losses when scrapers fail during critical sourcing periods.

AI-powered scraping tools have shown impressive capabilities in circumventing traditional anti-scraping measures. These systems can solve CAPTCHA challenges, mimic human browsing patterns, and adapt to website changes more effectively than traditional scrapers. They can process multimedia content, extract text from images, and understand data context in ways that seem almost human-like.

The technology behind AI scraping continues to advance rapidly, with machine learning models becoming increasingly sophisticated at mimicking natural user behavior. Some AI scrapers can even adjust their request timing and patterns based on the website's response, creating more convincing human-like interactions that are harder for detection systems to identify.

Despite their technical sophistication, AI scrapers still violate Amazon's terms of service and create the same legal risks as traditional scraping methods. The advanced capabilities of AI tools might actually increase liability, as they enable more extensive data collection that could be seen as more egregious violations.

The technical complexity of AI scraping tools also creates new vulnerabilities. These systems require significant computational resources, are expensive to maintain, and often operate as black boxes, making it difficult for users to fully understand how data is collected or validated. When AI scrapers fail or produce incorrect data, the consequences can be more severe given the scale of their operations.

Manual vetting processes involve trained professionals who understand the nuances of Amazon's marketplace that automated systems miss. Human reviewers can identify intellectual property risks, brand restrictions, and pricing anomalies that could lead to account suspensions or legal issues. They recognize patterns that indicate potential problems before sellers invest in inventory.

Amazon Online Arbitrage experts at FBA Lead List say this human oversight extends to understanding market dynamics that scrapers cannot detect. Experienced vetters can spot price manipulation, identify products involved in seller rotations, and recognize items that appear profitable but carry hidden risks.

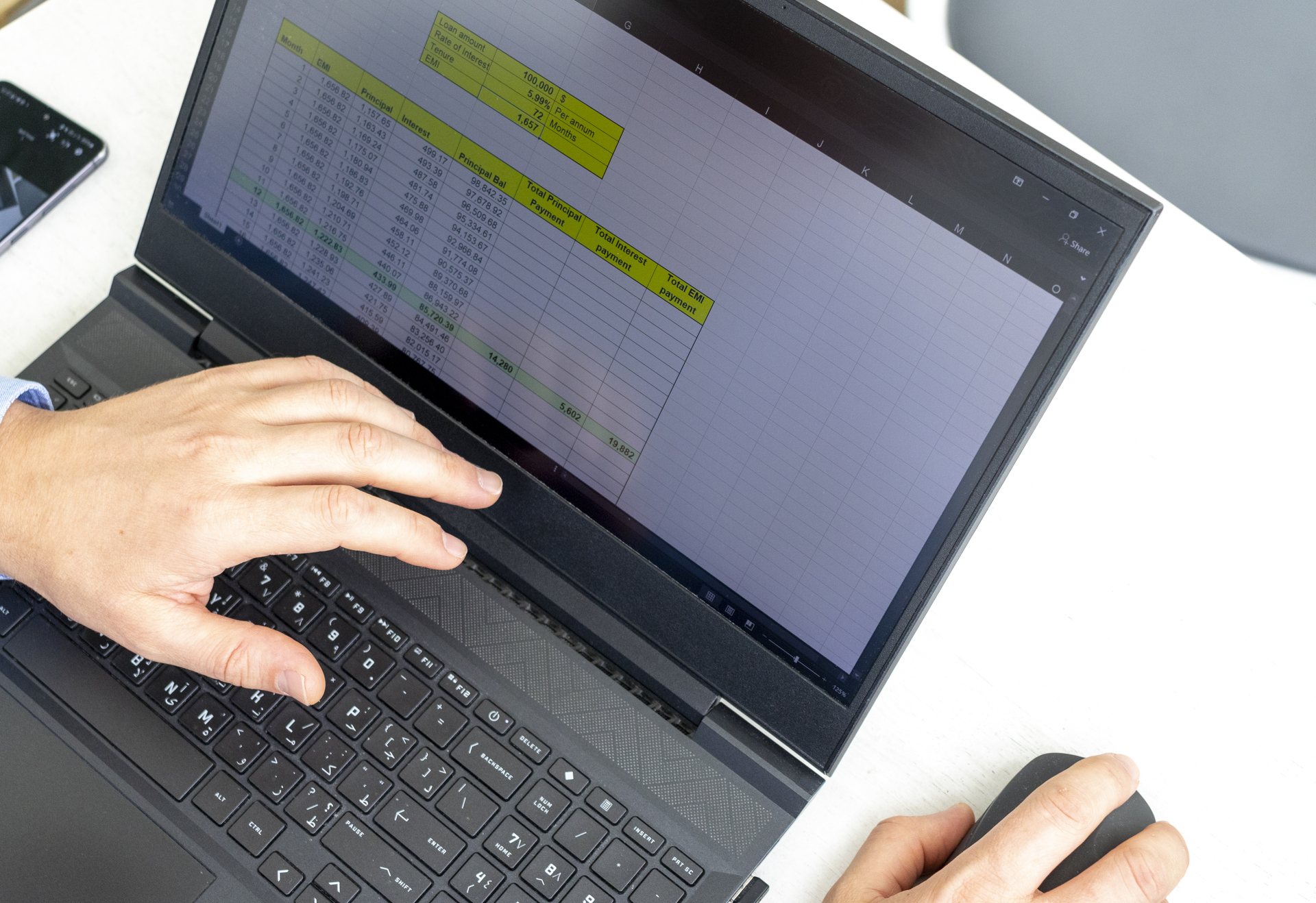

Manual vetting involves verifying profit margins, sales velocity, and return on investment calculations. Unlike scraped data that might be outdated or inaccurate, manually vetted leads include current market analysis and realistic profit projections based on recent sales data and market conditions.
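To make the margin and ROI checks concrete, here is a minimal sketch of the arithmetic a vetter might run on a single lead. The `Lead` class, its field names, and every dollar figure are hypothetical, chosen only for illustration. The sketch also assumes that ROI is measured against the buy cost plus inbound shipping; some sellers measure it against the buy cost alone.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    """One product lead. All fields are illustrative inputs, not real data."""
    sale_price: float               # expected selling price on Amazon
    buy_cost: float                 # what the seller pays to source one unit
    amazon_fees: float              # total per-unit fees, supplied by the caller
    inbound_shipping: float = 0.0   # per-unit cost of shipping to Amazon

    @property
    def profit(self) -> float:
        # What is left after sourcing cost, fees, and inbound shipping.
        return self.sale_price - self.buy_cost - self.amazon_fees - self.inbound_shipping

    @property
    def margin(self) -> float:
        # Profit as a fraction of the selling price.
        return self.profit / self.sale_price

    @property
    def roi(self) -> float:
        # Profit as a fraction of the cash spent to get the unit to Amazon
        # (assumption: buy cost plus inbound shipping).
        return self.profit / (self.buy_cost + self.inbound_shipping)


# Hypothetical example values.
lead = Lead(sale_price=30.00, buy_cost=12.00, amazon_fees=9.50, inbound_shipping=0.50)
print(f"profit ${lead.profit:.2f}, margin {lead.margin:.1%}, ROI {lead.roi:.1%}")
```

With these made-up inputs the lead shows an $8.00 profit, a margin of about 26.7%, and an ROI of 64%. The numbers are only as good as the inputs, which is why current prices and fees matter more than the formula itself.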

This verification process includes checking for seasonal trends, competitor analysis, and supply chain considerations that affect profitability. Manual vetters can provide context about why certain products are profitable now and whether that profitability is likely to continue, giving sellers confidence in their purchasing decisions.

One of the biggest problems with scraped lead lists is oversaturation, which occurs when hundreds or thousands of sellers receive the same product recommendations simultaneously. This creates a race to the bottom in pricing and eliminates profit margins almost immediately. Manual vetting services typically limit subscriber numbers to prevent this oversaturation.

Strategic selection also means choosing products that can support multiple sellers without immediate price competition. Manually vetted leads often focus on products with sufficient demand and a margin cushion to remain profitable even with some competitive pressure. This approach protects subscribers from the common scenario where scraped leads become unprofitable within days of distribution.
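One rough way to quantify a margin cushion is to ask how far the selling price can fall before a unit stops making money. The sketch below assumes a simplified fee model: a referral fee charged as a percentage of the selling price, plus a flat per-unit amount covering fulfillment and other fixed costs. The function names, the 15% rate, and the dollar amounts are hypothetical, and real fees vary by category and product size.

```python
def break_even_price(buy_cost: float, fixed_fees: float, referral_rate: float) -> float:
    """Lowest selling price at which a unit still covers its costs.

    Solves price - referral_rate * price - fixed_fees - buy_cost = 0 for price.
    """
    if not 0 <= referral_rate < 1:
        raise ValueError("referral_rate must be in [0, 1)")
    return (buy_cost + fixed_fees) / (1 - referral_rate)


def price_cushion(current_price: float, buy_cost: float,
                  fixed_fees: float, referral_rate: float) -> float:
    """Fraction by which the current price can drop before profit hits zero."""
    floor = break_even_price(buy_cost, fixed_fees, referral_rate)
    return (current_price - floor) / current_price


# Hypothetical inputs: $12 buy cost, $5 flat fees, 15% referral fee, $30 price.
floor = break_even_price(buy_cost=12.00, fixed_fees=5.00, referral_rate=0.15)
cushion = price_cushion(30.00, buy_cost=12.00, fixed_fees=5.00, referral_rate=0.15)
print(f"break-even price ${floor:.2f}, cushion {cushion:.1%}")
```

With these illustrative inputs the break-even price is $20.00, so the listing could absorb a price drop of roughly one third before it becomes unprofitable. A lead with a cushion close to zero is the kind that turns unprofitable as soon as a few more sellers join the listing.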

Manual vetting provides context and market intelligence that scraped data cannot deliver. Expert reviewers understand category trends, seasonal patterns, and emerging opportunities that require human insight to identify. They can spot products that are about to trend up or warn about categories experiencing declining demand.

This analysis includes actionable insights about sourcing strategies, optimal purchase quantities, and timing considerations that maximize profitability. Manual vetters often identify so-called rabbit trail opportunities, where one product lead opens up entire categories or brands worth pursuing for additional profits.

The performance difference between manually vetted Amazon leads and scraped data becomes clear in real-world results. Many subscribers to hand-vetted services report higher success rates, better profit margins, and fewer account issues compared to those relying on automated scraping tools. The human element in lead generation provides reliability and context that technology alone cannot match.

Manual vetting services also adapt to market changes more effectively than automated systems. For example, when Amazon updated its fee structure in 2025, experienced analysts quickly adjusted their profit calculations and selection criteria to maintain lead quality. This adaptability ensures that subscribers continue receiving relevant, profitable leads even as market conditions shift.

The cost-benefit analysis favors manual vetting for most sellers. According to industry feedback, while scraping tools may seem less expensive initially, the hidden costs of failed leads, account risks, and time spent validating questionable data often exceed the cost of professionally vetted services. Sellers using manual services spend more time sourcing profitable inventory and less time dealing with problematic leads.
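Sellers who want to run this comparison on their own numbers can reduce it to a single figure: the effective cost of each lead that turns out to be profitable, including the time spent validating the ones that do not. The sketch below is one possible way to frame it. The function name, its parameters, and the example inputs are all hypothetical placeholders, not measurements of any real tool or service.

```python
def cost_per_profitable_lead(monthly_cost: float,
                             leads_per_month: int,
                             success_rate: float,
                             minutes_validating_per_lead: float,
                             hourly_rate: float) -> float:
    """Effective cost of one profitable lead, counting validation time.

    success_rate is the fraction of leads that prove profitable (0 to 1).
    hourly_rate is what the seller considers an hour of their time to be worth.
    """
    # Time spent checking every lead, converted to money.
    validation_cost = leads_per_month * minutes_validating_per_lead / 60 * hourly_rate
    profitable_leads = leads_per_month * success_rate
    if profitable_leads <= 0:
        raise ValueError("no profitable leads; cost per lead is undefined")
    return (monthly_cost + validation_cost) / profitable_leads


# Placeholder inputs only: replace them with your own tracked figures.
example = cost_per_profitable_lead(
    monthly_cost=50.0,
    leads_per_month=400,
    success_rate=0.05,
    minutes_validating_per_lead=6,
    hourly_rate=25.0,
)
print(f"effective cost per profitable lead: ${example:.2f}")
```

Running the same function once for each lead source, with figures tracked over a month or two, gives a like-for-like comparison. It does not capture account risk, which is harder to express as a dollar amount.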